4. makemore (part 3): activations & gradients, batchnorm#

import sys

IN_COLAB = "google.colab" in sys.modules

if IN_COLAB:

print("Cloning repo...")

!git clone --quiet https://github.com/ckaraneen/micrograduate.git > /dev/null

%cd micrograduate

print("Installing requirements...")

!pip install --quiet uv

!uv pip install --system --quiet -r requirements.txt

Intro#

Here, we will continue our implementation of makemore. In the previous lesson, we implemented an character-level language model using a mlp along the lines of Bengio et al. 2003. The model took as inputs a few past characters and predicted the next character in the sequence. What we would like to do is move on to more complex and larger nns, like

RNN, following Mikolov et al. 2010

LSTM, following Graves et al. 2014

GRU, following Kyunghyun Cho et al. 2014

CNN, following Oord et al., 2016

Transformer, following Vaswani et al. 2017

But before we do so, let’s stick around at the level of the mlp for a little longer in order to develop an intuitive understanding of the activations during training, and especially the gradients flowing backwards: how they behave and how they look like. This is important for understanding the history of the development of newer architectures. Because, RNNs, as we’ll see, for example, although they are very expressive, are universal function approximators and can in principle implement all algorithms, we will see that they are not that easily optimizable with the first-order gradient-based techniques that we have available to us and that we use all the time. The key to understanding why they are not easily optimizable, is to understand the activations and the gradients and how they behave during training. What we’ll also see is that a lot of variants since RNNs, have tried to improve upon this situation. And so, that’s the path that we have to take.

Rebuilding mlp#

So, let’s get started by first building on the code from the previous lesson.

import random

random.seed(42)

import torch

import torch.nn.functional as F

import matplotlib.pyplot as plt

if IN_COLAB:

%matplotlib inline

else:

%matplotlib ipympl

SEED = 2147483647

# read in all the words

words = open("names.txt", "r").read().splitlines()

words[:8]

['emma', 'olivia', 'ava', 'isabella', 'sophia', 'charlotte', 'mia', 'amelia']

len(words)

32033

# build the vocabulary of characters and mappings to/from integers

chars = sorted(list(set("".join(words))))

ctoi = {s: i + 1 for i, s in enumerate(chars)}

ctoi["."] = 0

itoc = {i: s for s, i in ctoi.items()}

vocab_size = len(itoc)

print(itoc)

print(vocab_size)

{1: 'a', 2: 'b', 3: 'c', 4: 'd', 5: 'e', 6: 'f', 7: 'g', 8: 'h', 9: 'i', 10: 'j', 11: 'k', 12: 'l', 13: 'm', 14: 'n', 15: 'o', 16: 'p', 17: 'q', 18: 'r', 19: 's', 20: 't', 21: 'u', 22: 'v', 23: 'w', 24: 'x', 25: 'y', 26: 'z', 0: '.'}

27

block_size = 3

def build_dataset(words):

x, y = [], []

for w in words:

context = [0] * block_size

for ch in w + ".":

ix = ctoi[ch]

x.append(context)

y.append(ix)

context = context[1:] + [ix] # crop and append

x = torch.tensor(x)

y = torch.tensor(y)

print(x.shape, y.shape)

return x, y

random.shuffle(words)

n1 = int(0.8 * len(words))

n2 = int(0.9 * len(words))

xtrain, ytrain = build_dataset(words[:n1])

xval, yval = build_dataset(words[n1:n2])

xtest, ytest = build_dataset(words[n2:])

torch.Size([182625, 3]) torch.Size([182625])

torch.Size([22655, 3]) torch.Size([22655])

torch.Size([22866, 3]) torch.Size([22866])

def define_nn(

n_hidden=200, n_embd=10, w1_factor=1.0, b1_factor=1.0, w2_factor=1.0, b2_factor=1.0

):

global C, w1, b1, w2, b2

g = torch.Generator().manual_seed(SEED)

C = torch.randn((vocab_size, n_embd), generator=g)

w1 = torch.randn(n_embd * block_size, n_hidden, generator=g) * w1_factor

b1 = torch.randn(n_hidden, generator=g) * b1_factor

w2 = torch.randn(n_hidden, vocab_size, generator=g) * w2_factor

b2 = torch.randn(vocab_size, generator=g) * b2_factor

parameters = [C, w1, b1, w2, b2]

print(sum(p.nelement() for p in parameters))

for p in parameters:

p.requires_grad = True

return parameters

def forward(x, y):

emb = C[x]

embcat = emb.view(emb.shape[0], -1)

hpreact = embcat @ w1 + b1 # hidden layer pre-activation

h = torch.tanh(hpreact)

logits = h @ w2 + b2

loss = F.cross_entropy(logits, y)

return hpreact, h, logits, loss

def backward(parameters, loss):

for p in parameters:

p.grad = None

loss.backward()

def update(parameters, lr):

for p in parameters:

p.data += -lr * p.grad

def train(x, y, initial_lr=0.1, maxsteps=200000, batchsize=32, redefine_params=False):

global parameters

lossi = []

if redefine_params:

parameters = define_nn()

for p in parameters:

p.requires_grad = True

for i in range(maxsteps):

bix = torch.randint(0, x.shape[0], (batchsize,))

xb, yb = x[bix], y[bix]

hpreact, h, logits, loss = forward(xb, yb)

backward(parameters, loss)

lr = initial_lr if i < 100000 else initial_lr / 10

update(parameters, lr=lr)

# track stats

if i % 10000 == 0: # print every once in a while

print(f"{i:7d}/{maxsteps:7d}: {loss.item():.4f}")

lossi.append(loss.log10().item())

return hpreact, h, logits, lossi

@torch.no_grad() # this decorator disables gradient tracking

def print_loss(x, y, prefix=""):

_, _, _, loss = forward(x, y)

print(f"{prefix} {loss}")

return loss

parameters = define_nn()

_, _, _, lossi = train(xtrain, ytrain)

print_loss(xtrain, ytrain, prefix="train")

print_loss(xval, yval, prefix="val");

11897

0/ 200000: 26.9154

10000/ 200000: 3.1635

20000/ 200000: 2.6757

30000/ 200000: 2.0344

40000/ 200000: 2.6388

50000/ 200000: 2.0378

60000/ 200000: 2.8707

70000/ 200000: 2.1878

80000/ 200000: 2.1205

90000/ 200000: 2.1872

100000/ 200000: 2.6178

110000/ 200000: 2.4887

120000/ 200000: 1.6539

130000/ 200000: 2.2179

140000/ 200000: 2.2891

150000/ 200000: 1.9963

160000/ 200000: 1.7527

170000/ 200000: 1.7564

180000/ 200000: 2.2431

190000/ 200000: 2.2670

train 2.13726806640625

val 2.1725592613220215

plt.figure()

plt.plot(lossi)

[<matplotlib.lines.Line2D at 0x7f8a75ea0c50>]

def sample_from_model():

# sample from the model

g = torch.Generator().manual_seed(SEED + 10)

for _ in range(20):

out = []

context = [0] * block_size # initialize with all ...

while True:

emb = C[torch.tensor([context])] # (1,block_size,d)

h = torch.tanh(emb.view(1, -1) @ w1 + b1)

logits = h @ w2 + b2

probs = F.softmax(logits, dim=1)

ix = torch.multinomial(probs, num_samples=1, generator=g).item()

context = context[1:] + [ix]

out.append(ix)

if ix == 0:

break

print("".join(itoc[i] for i in out))

sample_from_model()

eria.

kayanniee.

med.

ryah.

rethrus.

jernee.

aderedielin.

shi.

jen.

eden.

estana.

selyn.

malyan.

nyshabergiagriel.

kinleeney.

panthuon.

ubz.

geder.

yarue.

elsy.

So that’s our starting point. Awesome!

Dealing with bad weights#

Now, the first thing to scrutinize is the initialization. An experienced person would tell you that our network is very improperly configured at initialization and there are multiple things wrong with it. Let’s start with the first one. If you notice the loss at iteration 0/200000, it is rather high. This rapidly comes down to \(2\) or so in the following training iterations. But you can tell that initialization is all messed up just by an initial loss that is way too high. In the training of nns, it is almost always the case that you’ll have a rough idea of what loss to expect at initialization. And that just depends on the loss function and the problem setup. In our case, we expect a number much lower than what we get. Let’s calculate it together. Basically, there’s \(27\) characters that can come next for any one training example. At initialization, we have no reason to believe that any characters to be much more likely than others. So, we’d expect that the probability distribution that comes out initially is a uniform distribution, assigning about-equal probability to all the \(27\) characters. This means that what we’d like the ideal probability we should record for any character coming next to be:

ideal_p = torch.tensor(1.0 / 27)

ideal_p.item()

0.03703703731298447

And then the loss we would expect is the negative log probability:

expected_loss = -torch.log(ideal_p)

expected_loss.item()

3.295836925506592

So what’s happening right now is that at initialization the network is creating probability distributions that are all messed up. Some characters are very confident and some characters are very not-confident. Basically, the network is very confidently wrong and that’s what makes it record a very high loss. For simplicity, let’s see a smaller, \(4\)-dimensional example of the issue, by assuming we only have \(4\) characters.

def logits_4d(logits=torch.tensor([0.0, 0.0, 0.0, 0.0]), index=0):

probs = torch.softmax(logits, dim=0)

loss = -probs[index].log()

return probs, loss.item()

logits_4d()

(tensor([0.2500, 0.2500, 0.2500, 0.2500]), 1.3862943649291992)

Suppose we have logits that come out of an nn that are all \(0\). Then, when we calculate the softmax of these logits and get probabilities that are a diffused distribution that sums to \(1\) and is exactly uniform. Whereas, the loss we get is the loss we would expect for a \(4\)-dimensional example with a uniform probability distribution. And so it doesn’t matter whether the index is \(0\), \(1\), \(2\) or \(3\). We’ll see of course that as we start to manipulate these logits, the loss changes. For example:

logits_4d(logits=torch.tensor([0.0, 0.0, 5.0, 0.0]), index=2)

(tensor([0.0066, 0.0066, 0.9802, 0.0066]), 0.020012274384498596)

Yields a very low loss since we are assigning the correct probability at initialization to the correct (3rd) label. Much more likely it is that some other dimension will have a high logit, e.g.

logits_4d(logits=torch.tensor([0.0, 5.0, 0.0, 0.0]), index=2)

(tensor([0.0066, 0.9802, 0.0066, 0.0066]), 5.020012378692627)

and then what happens is we start to record a much higher loss. So, what of course can happens is that the logits might take on extreme values and come out like this:

logits_4d(logits=torch.tensor([-3.0, 5.0, 0.0, 2.0]), index=2)

(tensor([3.1741e-04, 9.4620e-01, 6.3754e-03, 4.7108e-02]), 5.055302619934082)

which also leads to a very high loss. For example, if logits are be relatively close to \(0\), the loss is not too big. For example:

randn_logits = torch.randn(4)

print(randn_logits)

logits_4d(logits=randn_logits, index=2)

tensor([ 0.6490, 0.7479, -0.3871, -0.5356])

(tensor([0.3617, 0.3993, 0.1284, 0.1106]), 2.0529625415802)

However, if they are larger, it’s very unlikely that you are going to be guessing the correct bucket and so you’d be confidently wrong and usually record a very high loss:

big_randn_logits = torch.randn(4) * 10

print(big_randn_logits)

logits_4d(logits=big_randn_logits, index=3)

tensor([ 9.4665, 7.1429, 2.0826, -18.9976])

(tensor([9.1030e-01, 8.9138e-02, 5.6545e-04, 3.9573e-13]), 28.55805015563965)

For even more extreme logits, you might get extreme loss values:

huge_randn_logits = torch.randn(4) * 100

print(huge_randn_logits)

logits_4d(logits=huge_randn_logits, index=1)

tensor([ 8.3889, -112.5325, -85.9192, -154.8166])

(tensor([1.0000e+00, 0.0000e+00, 1.1028e-41, 0.0000e+00]), inf)

Basically, such logits are not good and we want the logits to be roughly \(0\) when the network is initialized. In fact, the logits don’t need to be zero, they just have to be equal, e.g.:

logits_4d(logits=torch.tensor([3., 3., 3., 3.]), index=2)

(tensor([0.2500, 0.2500, 0.2500, 0.2500]), 1.3862943649291992)

Because of the normalization inside softmax, this will actually come out ok. But, for symmetry, we don’t want it to be any arbitrary positive or negative number, just zero. So let’s now concretely see where things go wrong in our initial example. First, let’s reinitialize our network:

parameters = define_nn()

11897

Then let’s train it only for one step:

_, _, logits, _ = train(xtrain, ytrain, maxsteps=1)

0/ 1: 29.0502

If we print the logits, we’ll see that they take on quite extreme values:

logits[0]

tensor([ 1.8587e+01, -8.8322e+00, -4.3481e+00, 9.0512e+00, 2.1609e+00,

5.8525e+00, -1.5959e+01, 1.7691e-01, 1.4306e+01, 5.5933e+00,

-1.0145e+01, -2.8939e+00, -2.0576e+01, 1.0257e+01, 1.2336e+01,

-1.0782e+01, -3.0768e+01, -4.1870e+00, -1.3147e-02, 2.1114e+01,

-6.2802e+00, -5.8485e+00, -9.8297e-01, 2.2049e+01, -4.3106e+00,

1.4430e+01, -6.5003e+00], grad_fn=<SelectBackward0>)

which is what is creating the fake confidence and why the loss is so high. Let’s now try to scale down the values of the some of our parameters (e.g. w2) and retrain for a step:

parameters = define_nn(w2_factor=0.1, b2_factor=0.0)

_ = train(xtrain, ytrain, maxsteps=1)

11897

0/ 1: 4.3367

Aha! The loss is lower, which makes sense. Let’s try a smaller factor:

parameters = define_nn(w2_factor=0.01, b2_factor=0.0)

_ = train(xtrain, ytrain, maxsteps=1)

11897

0/ 1: 3.2853

The loss decreases further. Alright, so we’re getting closer and closer… So, you might ask, why not just initialize the weights to \(0.0\)?

parameters = define_nn(w2_factor=0.0, b2_factor=0.0)

_ = train(xtrain, ytrain, maxsteps=1)

11897

0/ 1: 3.2958

Besides, it does yield an acceptable initial loss value. Well, you don’t want to be setting the parameters of a nn exactly to \(0\). You usually want it to be small numbers instead of exactly \(0\). Let’s see soon where things might go wrong if we set the initial parameters to \(0\). For now, let’s just consider the \(0.01\) factor, which yields a small-enough initial loss:

parameters = define_nn(w2_factor=0.01, b2_factor=0.0)

_, _, logits, _ = train(xtrain, ytrain, maxsteps=1)

11897

0/ 1: 3.3213

The logits are now coming out as closer to \(0\):

logits[0]

tensor([ 0.0719, 0.0493, -0.2910, 0.0210, 0.2192, 0.0624, 0.2226, 0.2487,

0.1420, 0.1322, 0.0790, -0.0102, -0.0382, 0.1264, 0.0133, -0.0155,

0.0955, -0.1007, 0.0885, 0.0645, 0.0264, 0.1433, 0.0642, -0.1751,

-0.0414, -0.1055, -0.1209], grad_fn=<SelectBackward0>)

Cool. So, let’s now train the network completely, and see what losses we get.

parameters = define_nn(w2_factor=0.01, b2_factor=0.0)

_, _, _, lossi = train(xtrain, ytrain)

11897

0/ 200000: 3.2738

10000/ 200000: 2.2549

20000/ 200000: 2.1941

30000/ 200000: 2.0484

40000/ 200000: 2.1231

50000/ 200000: 2.3176

60000/ 200000: 1.9067

70000/ 200000: 2.3038

80000/ 200000: 2.2954

90000/ 200000: 2.3491

100000/ 200000: 2.4612

110000/ 200000: 1.8579

120000/ 200000: 1.8499

130000/ 200000: 2.0158

140000/ 200000: 2.2136

150000/ 200000: 1.8362

160000/ 200000: 1.7483

170000/ 200000: 1.9169

180000/ 200000: 2.3354

190000/ 200000: 2.1250

plt.figure()

plt.plot(lossi)

[<matplotlib.lines.Line2D at 0x7f8a77193950>]

print_loss(xtrain, ytrain, prefix="train")

print_loss(xval, yval, prefix="val")

train 2.068324327468872

val 2.128187656402588

tensor(2.1282)

The loss gets smaller after the first step. Now, notice that our loss plot does not have the previous loss plot’s hockey stick appearance. The reason is that that shape came from the optimization process basically squashing down the weights to a much smaller range than the initial one. But, now since we’ve already initialized the weights with small values, no such significant shrinking takes places, and thus no big loss drop happens between the first couple training steps. Therefore, we are not getting any easy gains, as we previously did in the beginning, but only just the hard gains from training. One important point to keep in mind is that the training and validation losses are now a bit better, since training now goes on for a bit longer, since the first epochs are no longer spent for squashing the parameters.

Dealing with dead neurons#

Now, time to deal with a second problem. Although our loss after initializing with smaller weights is low:

parameters = define_nn(w2_factor=0.01, b2_factor=0.0)

hpreact, h, logits, _ = train(xtrain, ytrain, maxsteps=1)

11897

0/ 1: 3.3179

the activation variable contains many \(1.0\) and \(-1.0\) values:

h

tensor([[-1.0000, 0.9604, -0.1418, ..., -0.1266, 1.0000, 1.0000],

[-1.0000, 0.4796, -0.9999, ..., -0.9951, 0.9976, 1.0000],

[-0.9999, -0.1726, -1.0000, ..., -0.9927, 1.0000, 1.0000],

...,

[ 0.0830, -0.9999, 0.9990, ..., -0.7998, 0.9251, 1.0000],

[-1.0000, 0.9604, -0.1418, ..., -0.1266, 1.0000, 1.0000],

[-1.0000, -0.9665, -1.0000, ..., -0.9994, 0.9962, 0.9919]],

grad_fn=<TanhBackward0>)

Now, h is the result of the \(tanh\) activation function which is basically a squashing function that maps values within the \([-1.0, 1.0]\) range. To get an idea of the distribution of the values of h, let’s look at its histogram.

plt.figure()

plt.hist(h.view(-1).tolist(), 50);

We clearly see that most of the values of h are either \(-1.0\) or \(1.0\). So, this \(tanh\) is very very active. We can look at why that is by plotting the pre-activations that feed into the \(tanh\):

plt.figure()

plt.hist(hpreact.view(-1).tolist(), 50);

And we can see that the distribution of the preactivations is very very broad, with numbers between \(-20\) and around \(20\). That is why in the \(tanh\) output values of \(h\), everything is being squashed and capped to be in the \([-1.0, 1.0]\) range, with many extreme \(-1.0\) and \(1.0\) values. If you are new to nns, you might not see this as an issue. But if you’re well-versed in the dark arts of backprop and have an intuitive sense of how these gradients flow through a nn, you are looking at how the \(tanh\) values are distributed and you are sweating! Either case, let’s see why this is an issue. First and foremost, we have to keep in mind that during backprop, we do a backward pass by starting at the loss and flowing through the network backwards. In particular, we get to a point where we backprop through the \(tanh\) function. If you scroll up to the forward() function, you’ll see that the layer we first backprop through is the hidden nn layer (with parameters w2, b2), with n_hidden number of neurons, that implements an element-wise \(tanh\) non-linearity. Now, let’s look at what happens in \(tanh\) in the backward pass. We can actually go back to our very first micrograd implementation, in the first notebook and see how we implement \(tanh\). This is how the gradient of \(tanh\) is calculated: \((1 - t^2) \cdot \dfrac{\partial L}{\partial out}\). If the value of \(t\), the output of \(tanh\) is \(0\), then the \(tanh\) neuron is basically inactive and the gradient of the previous layer just passes through. Whereas, if \(t\) is \(-1\) or \(+1\), then the gradient becomes \(0\). This means that if most of the h values (outputs of \(tanh\)) are close to the flat \(-1\) and \(+1\) regions of the \(tanh\) output value range, then the gradients that are flowing through the network are getting destroyed at this layer: an unwanted side-effect. Let’s further investigate the amount of h activation values that are concentrated at the flat regions:

plt.figure(figsize=(20, 10))

plt.imshow(h.abs() > 0.99, cmap="gray", interpolation="nearest");

What we see in this data display are each one of the \(200\) neurons (columns) per each of the \(32\) examples/batches (rows). A white pixel represents a neuron whose output is in the flat \(tanh\) region: either \(-1\) or \(+1\). Whereas, a black pixel represents a neuron whose output is in-between those flat region values. In other words, the white neurons are all the maximum-valued neurons that block the flow of gradients during backprop. Of course, we would be in grave trouble if for all of these \(200\) neurons in each column (across all batches) were white. Because in that case we would have what we call a dead neuron. This would be a case wherein the initialization of weights and biases is such that no single example (batch) ever activates a neuron in the active region of the \(tanh\) value range, in between the flat, saturated regions. Since our display does not contain any column of all-whites, for each neuron of our nn, there are least one or a couple of neurons that activate in the active region, meaning that some gradients will flow through and each neuron will learn. Nevertheless, cases of dead neurons are possible and the way this manifests (e.g. for \(tanh\) neurons) is that no matter what inputs you plug in from your dataset, these dead neurons only fire either completely \(+1\) or completely \(-1\) and then these neurons will just not learn, because all the gradients will be zeroed out. These scenarios are not only true for \(tanh\), but for many other non-linearities that people use in nns.

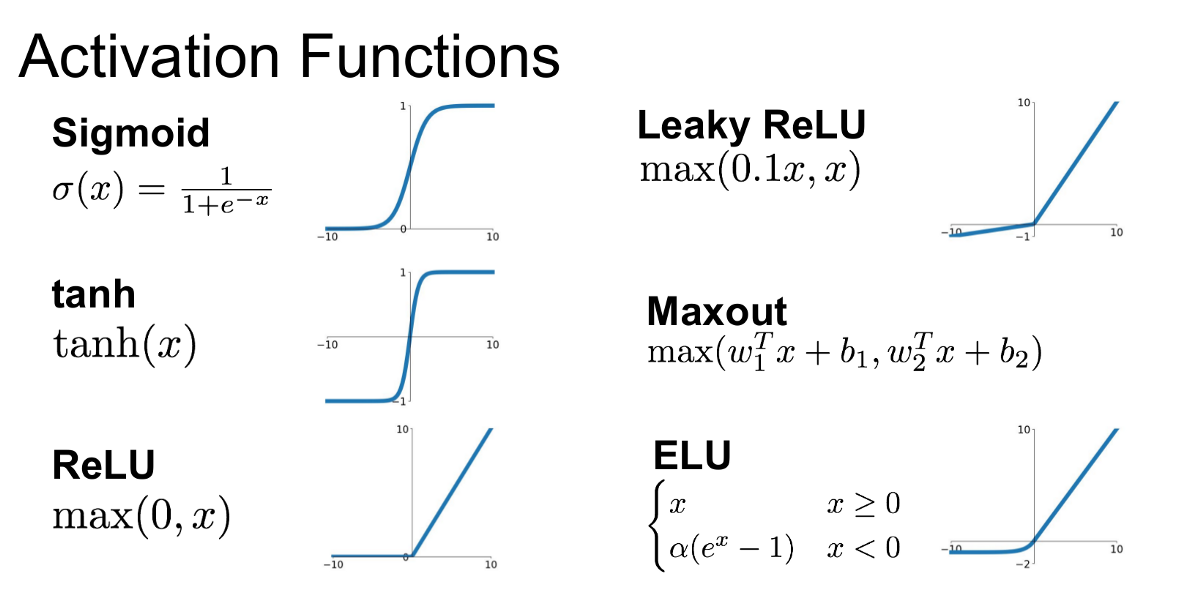

from IPython.display import Image, display

display(Image(filename='activations.png'))

For example, the \(sigmoid\) activation function will have the exact same issues, as it is a similar squashing function. Now, consider \(ReLU\), which has a completely flat region for negative input values. So, if you have a \(ReLU\) neuron, it is a pass-through if it is positive and if the pre-activation value is negative, it will just shut it off, giving an output value of \(0\). Therefore, if a neuron with a \(ReLU\) non-linearity never activates, so for any inputs you feed it from the dataset it never turns on and remains always in its flat region, then this \(ReLU\) neuron is considered a dead neuron: its weights and bias will never receive a gradient and will thus never learn, simply because the neuron never activated. And this can sometimes happen at initialization, because the weights and biases just make it so that by chance some neurons are forever dead. But it can also happen during optimization. If you have too high of a learning rate for example, sometimes you have these neurons that get too much of a gradient and get knocked out of the data manifold, resulting in no example ever activating such a neuron. Consequently, one danger with large gradient is knocking off neurons and making them forever dead. Other non-linearities such as \(leaky ReLU\) will not suffer from this issue as much, because of the lack of flat tails, as they’ll almost always yield gradients. But, to return to our \(tanh\) issue, the problem is that our \(tanh\) pre-activation hpreact values are too far away from \(0\), thus yielding a flat region activation distribution that is too saturated at the tanh flat regions, which leads to a suppression of learning for many neurons. How do we fix this? One easy way is to decrease the initial value of the w1 and b1 parameters:

parameters = define_nn(w1_factor=0.2, b1_factor=0.01, w2_factor=0.01, b2_factor=0.0)

hpreact, h, logits, _ = train(xtrain, ytrain, maxsteps=1)

plt.figure(figsize=(20, 10))

plt.imshow(h.abs() > 0.99, cmap="gray", interpolation="nearest");

11897

0/ 1: 3.3174

Now, our activations are not as saturated above 0.99 as they were before, with only a few white neurons. What is more, the activations are now more evenly distributed and the range of pre-activations is now significantly narrower:

plt.figure()

plt.hist(h.view(-1).tolist(), 50)

plt.figure()

plt.hist(hpreact.view(-1).tolist(), 50);

Since distributions look nicer now, perhaps this is what our initialization should be. Let’s now train a new network with this initialization setting and print the losses:

parameters = define_nn(w1_factor=0.2, b1_factor=0.01, w2_factor=0.01, b2_factor=0.0)

_ = train(xtrain, ytrain)

11897

0/ 200000: 3.3052

10000/ 200000: 2.6664

20000/ 200000: 2.5232

30000/ 200000: 2.0007

40000/ 200000: 1.8163

50000/ 200000: 2.1677

60000/ 200000: 2.2280

70000/ 200000: 2.5228

80000/ 200000: 2.1911

90000/ 200000: 2.4983

100000/ 200000: 2.1451

110000/ 200000: 1.7719

120000/ 200000: 1.9741

130000/ 200000: 1.6981

140000/ 200000: 1.8294

150000/ 200000: 1.8194

160000/ 200000: 2.0302

170000/ 200000: 2.0787

180000/ 200000: 1.9397

190000/ 200000: 2.0921

print_loss(xtrain, ytrain, prefix='train')

print_loss(xval, yval, prefix='val');

train 2.0368359088897705

val 2.1042771339416504

The validation loss continues to improve! This exercise clarifies the effect of good initialization on performance and emphasizes being aware of nn internals like activations and gradients. Now, we’re working with a very small network which is basically just a 1-hidden layer mlp. Because the network is so shallow, the optimization problem is quite easy and very forgiving. So, even though our initialization in the beginning was terrible, the network still learned eventually. It just got a bit of a worse result. This is not the case in general though. Once we actually start working with much deeper networks that have say 50 layers, things can get much more complicated and these problems stack up, and it is often not surprising to get into a place where a network is basically not training at all, if your initialization is bad enough. Generally, the deeper and more complex a network is, the less forgiving it is to some of the aforementioned errors. But what has worked so far with our simple example is great! However, we have come up with a bunch of magic weight and bias factors (e.g. w1_factor). How did we come up with these? And how are we supposed to set these if we have a large nn with lots and lots of layers? As you might assume, no one sets these by hand. And there’s rather principled ways of setting these scales that I’d like to introduce to you now.

Learning to set the factors#

Let’s start with a short snippet, just to begin to motivate this discussion by defining an input tensor of many multi-dimensional examples and a weight tensor of a hidden layer, both drawn from a Gaussian distribution. We’ll calculate the mean and standard deviation of these inputs and the corresponding pre-activations:

def plot_x_y_distributions(n_inputs=10, weight_factor=1.0):

x = torch.randn(1000, n_inputs) # many examples of inputs

w = torch.randn(n_inputs, 200) * weight_factor # weights of the hidden layer

y = x @ w # pre-activations

print(x.mean(), x.std())

print(y.mean(), y.std())

plt.figure(figsize=(20, 5))

plt.subplot(121)

plt.hist(x.view(-1).tolist(), 50, density=True)

plt.subplot(122)

plt.hist(y.view(-1).tolist(), 50, density=True)

plot_x_y_distributions()

tensor(-0.0020) tensor(1.0074)

tensor(0.0011) tensor(3.2319)

If you notice, the std of the pre-activations y has increased compared to that of x, as can also be seen by the widening of the Gaussian. The left Gaussian has basically undergone a stretching operation, resulting in the expanded right plot. We don’t want that. We want most of our nn to have relatively similar activations with a relatively uniform Gaussian throughout the nn. So the question becomes, how do we scale these weight vectors (e.g. w) to preserve the Gaussian distribution of the inputs (e.g. left)? Let’s do some experiments. If we scale w by a large number, e.g. \(5\):

plot_x_y_distributions(weight_factor=5)

tensor(0.0091) tensor(0.9923)

tensor(-0.0045) tensor(15.6379)

this Gaussian grows and grows in std, with the outputs in the x-axis taking on more and more extreme values (right plot). But if we scale the weights down, e.g. by \(0.2\):

plot_x_y_distributions(weight_factor=0.2)

tensor(-0.0002) tensor(1.0079)

tensor(-0.0013) tensor(0.6582)

then, conversely, the Gaussian gets smaller and smaller and it’s shrinking. Notice the std of y now being smaller than that of x. The question then becomes: what is the appropriate factor to exactly preserve the std of the inputs? And it turns out that the correct answer, mathematically, (when you work out the variance of the x @ w multiplication), is that you are supposed to divide by the square root of the fan-in. Meaning, the square root of the number of inputs. Therefore if the number of inputs is \(10\) then the appropriate factor for preserving the Gaussian distribution of the inputs is \(10^{-1/2}\).

plot_x_y_distributions(n_inputs=10, weight_factor=10**-0.5)

tensor(0.0140) tensor(1.0000)

tensor(0.0028) tensor(1.0127)

Now we see that the std remains roughly the same! Now, unsuprisingly, a number of papers have looked into how to best initialize nns and in the case of mlps, we can have these fairly deep networks that have these nonlinearities in between layers and we want to make sure that the activations are well-behaved and they don’t expand to infinity or shrink all the way to zero. And the question is, how do we initialize the weights so that they take on reasonable values throughout the network. In Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification, they study convolutional nns (CNNs) and \(ReLU\) and \(PReLU\) non-linearities. But the analysis is very similar to the \(tanh\) non-linearity. As we saw previously, \(ReLU\) is somewhat of a squashing function where all the negative values are simply clamped to \(0\). Because with \(ReLU\)s half of the distribution is thrown away, they find in their analysis of the forward activations of the nn, that you have to compensate for that with a gain. They find that to initialize their weights they have to do it with a zero-mean Gaussian whose std is \(\sqrt{2/n_l}\). We just did the same, multiplying our weights by \(\sqrt{1/10}\) (the \(2\) has to do with the \(ReLU\) activation function they use). They also study the backward propagation case, finding that the backward pass is also approximately initialized up to a constant factor \(c_2/d_L\) that has to do with the number of hidden neurons in early and late layer. Now, this Kaiming initialization is also implemented in pytorch and it is probably the most common way of initializing nns now. This PyTorch method takes a mode and nonlinearity argument among others, with the latter determining the gain factor (e.g. \(\sqrt{2}\)). Why do we need a gain? For example, \(ReLU\) is a contractive transformation that squashes the output distribution by taking any negative value and clamping it to zero. \(tanh\) also squashes in some way, as it will squeeze values at the tails of its range. Therefore, in order to fight the squeezing-in of these activation functions, we have to boost the weights a little bit in order to counteract this effect and re-normalize everything back to standard unit deviation. So that’s why there’s a little bit of a gain that comes out. Now we’re actually intentionally skipping through this section quickly. The reason for that is the following. Around 2015, when this paper was written, you had to actually be extremely careful with the activations and the gradients, their ranges, their histograms, the precise setting of gains and the scrutinizing of the non-linearities and so on… So, everything was very finicky and very fragile and everything had to be very properly arranged in order to train a nn. But there are a number of modern innovations that made everything significantly more stable and well-behaved. And it has become less important to initialize these networks “exactly right”. Some of those innovations are for example: residual connections (which we will cover in the next notebooks), a number of normalization layers (e.g. batch normalization, layer normalization, group normalization) and of course much better optimizers: not just stochastic gradient descent (the simple optimizer we have been using), but slightly more complex optimizers such as RMSProp and especially Adam. All of these modern innovations make it less important for you to precisely calibrate the initialization of the nn. So, what do people do in practice? They usually initialize their weights with Kaiming-normally, like we did. Now notice how the following multiplier ends up being the std of Gaussian distribution:

multiplier = 0.2

(torch.randn(10000) * multiplier).std().item()

0.1984076201915741

But, according to the kaiming PyTorch docs, we want an std of \(\frac{gain}{\sqrt{fan\_in}}\). Therefore, for a \(tanh\) nonlinearity:

n_embd = 10

kaiming_w1_factor = (5 / 3) / ((n_embd * block_size) ** 0.5)

Now let’s re-initialize and re-train our nn with this initilization:

parameters = define_nn(

w1_factor=kaiming_w1_factor, b1_factor=0.01, w2_factor=0.01, b2_factor=0.0

)

hpreact, _, _, _ = train(xtrain, ytrain)

11897

0/ 200000: 3.3202

10000/ 200000: 2.0289

20000/ 200000: 2.2857

30000/ 200000: 1.9499

40000/ 200000: 1.8151

50000/ 200000: 2.2310

60000/ 200000: 2.1923

70000/ 200000: 1.9824

80000/ 200000: 2.1736

90000/ 200000: 2.1285

100000/ 200000: 2.0951

110000/ 200000: 1.9936

120000/ 200000: 1.8634

130000/ 200000: 2.3813

140000/ 200000: 1.7260

150000/ 200000: 1.7054

160000/ 200000: 1.8842

170000/ 200000: 2.1857

180000/ 200000: 1.7953

190000/ 200000: 1.6770

print_loss(xtrain, ytrain, prefix='train')

print_loss(xval, yval, prefix='val');

train 2.040121078491211

val 2.1033377647399902

Of course, our loss is quite similar to before. The difference now though is that we don’t need to inspect histograms and introduce arbitrary factors. On the contrary, we now have a semi-principled way to initialize our weights that is also scalable to much larger networks which we can use as a guide. However, this precise weight initialization is not as important as we might think nowadays, due to some modern innovations.

Batchnorm#

Let’s now introduce one of them. Batch Normalization (batchnorm) came out in 2015 from a team at Google in an extremely impactful paper, making it possible to train deep neural networks quite reliably. It basically just worked. Here’s what batchnorm does and how it’s implemented. Like we mentioned before, we don’t want the pre-activations (e.g. hpreact) to \(tanh\) to be too small, nor too large because then the outputs will turn out either close to \(0\) or saturated. Instead, we want the pre-activations to be roughly Gaussian (with a zero mean and a std of \(1\)), at least at initialization. So, the insight from the batchnorm paper is: ok, we have these hidden pre-activation states/values that we’d like to be Gaussian, then why not take them and just normalize them to be Gaussian? Haha. I know, it sounds kinda crazy but you can just do that, because standardizing hidden states so that they become Gaussian is a perfectly differentiable operation. And so the gist of batchnorm is that if we want unit Gaussian hidden states in our network, then we can just normalize them to be so. Let’s see how that works. If you scroll up to our definition of the forward function we can see the pre-activations hpreact before they are fed into the \(tanh\) function. Now, the idea, remember, is we are trying to make these roughly Gaussian. If the values are too small, the \(tanh\) is kind of inactive, whereas if they are very large, the \(tanh\) becomes very saturated and the gradients don’t flow. So, let’s learn how to standardize hpreact to be roughly Gaussian.

hpreact.shape

torch.Size([32, 200])

hpmean = hpreact.mean(0, keepdim=True)

hpmean.shape

torch.Size([1, 200])

hpstd = hpreact.std(0, keepdim=True)

hpstd.shape

torch.Size([1, 200])

After calculating the mean and std across batches of hpreact, which in the paper are referred to the “mini-batch mean” and “mini-batch variance”, respectively, next, following along the paper, we are going to normalize or standardize the inputs (e.g. hpreact) by subtracting the mean and dividing by the std. Basically:

hpreact = (hpreact - hpmean) / hpstd

What normalization does is that now every single neuron and its firing rate will be exactly unit Gaussian for each batch (which is why it’s called batchnorm). Now, we could in principle train using normalization. But we would not achieve a very good result. And the reason for that is that we want the pre-activations to be roughly Gaussian, but only at initialization. But we don’t want these to be forced to be Gaussian always. We’d like to allow the nn to move the distributions around, such as making them more diffuse, more sharp, perhaps to make some tanh neurons to be more trigger-happy or less trigger-happy. So we’d like this distribution to move around and we’d like the backprop to tell us how that distribution should move around. So in addition to standardization of any point in the network, we have to also introduce this additional component mentioned in the paper described as “scale and shift”. Basically, what we want to be doing is multiplying the normalized values by a gain \(\gamma\) and then addding a bias \(\beta\) to get a final output of each layer. Let’s define them:

bngain = torch.ones(1, hpreact.shape[1])

bnbias = torch.zeros(1, hpreact.shape[1])

so that:

hpreact = bngain * (hpreact - hpmean) / hpstd + bnbias

Because the gain is initialized to \(1\) and the bias to \(0\), at initialization, each neuron’s firing values in this batch will be exactly unit Gaussian and we’ll have nice numbers, regardless of what the distribution of the incoming (e.g. hpreact) tensors are. That is roughly what we want, at least during initialization. And during optimization, we’ll be able to backprop and change the gain and the bias, so the network is given the full ability to do with this whatever it wants internally. In order to train these, we have to make sure to include these in the parameters of the nn. To do so, and by effect facilitate backprop, let’s update our define_nn, forward functions accordingly:

def define_nn(

n_hidden=200, n_embd=10, w1_factor=1.0, b1_factor=1.0, w2_factor=1.0, b2_factor=1.0

):

global C, w1, b1, w2, b2, bngain, bnbias

g = torch.Generator().manual_seed(SEED)

C = torch.randn((vocab_size, n_embd), generator=g)

w1 = torch.randn(n_embd * block_size, n_hidden, generator=g) * w1_factor

b1 = torch.randn(n_hidden, generator=g) * b1_factor

w2 = torch.randn(n_hidden, vocab_size, generator=g) * w2_factor

b2 = torch.randn(vocab_size, generator=g) * b2_factor

bngain = torch.ones((1, n_hidden))

bnbias = torch.zeros((1, n_hidden))

parameters = [C, w1, b1, w2, b2, bngain, bnbias]

print(sum(p.nelement() for p in parameters))

for p in parameters:

p.requires_grad = True

return parameters

def forward(x, y):

emb = C[x]

embcat = emb.view(emb.shape[0], -1)

hpreact = embcat @ w1 + b1 # hidden layer pre-activation

# batchnorm

hpreact = (

bngain

* (hpreact - hpreact.mean(0, keepdim=True))

/ hpreact.std(0, keepdim=True)

+ bnbias

)

h = torch.tanh(hpreact)

logits = h @ w2 + b2

loss = F.cross_entropy(logits, y)

return hpreact, h, logits, loss

And now, let’s initialize our new nn and train!

parameters = define_nn(

w1_factor=kaiming_w1_factor, b1_factor=0.01, w2_factor=0.01, b2_factor=0.0

)

_ = train(xtrain, ytrain)

print_loss(xtrain, ytrain, prefix="train")

print_loss(xval, yval, prefix="val");

12297

0/ 200000: 3.3033

10000/ 200000: 2.4509

20000/ 200000: 2.2670

30000/ 200000: 2.2647

40000/ 200000: 2.2298

50000/ 200000: 1.9236

60000/ 200000: 2.1204

70000/ 200000: 2.3262

80000/ 200000: 2.1840

90000/ 200000: 1.9809

100000/ 200000: 2.2282

110000/ 200000: 2.0057

120000/ 200000: 2.0795

130000/ 200000: 1.8743

140000/ 200000: 2.2611

150000/ 200000: 1.4639

160000/ 200000: 2.2464

170000/ 200000: 2.2788

180000/ 200000: 2.3267

190000/ 200000: 1.7778

train 2.0695457458496094

val 2.1072680950164795

We get a loss that is comparable to our previous results. Here’s a rough summary of our losses (re-running this notebook might yield slightly different values, but you get the point):

# loss log

# original:

train 2.127638339996338

val 2.171938180923462

# fix softmax confidently wrong

train 2.0707266330718994

val 2.1337196826934814

# fix tanh layer saturated at init

train 2.0373899936676025

val 2.1040639877319336

# use semi-principled kaiming initialization instead of hacky way:

train 2.038806438446045

val 2.108304977416992

# add a batchnorm layer

train 2.0688135623931885

val 2.10699462890625

However, we should not actually be expecting an improvement in this case. And that’s because we are dealing with a very simple nn that has just a single hidden layer. In fact, in this very simple case of just one hidden layer, we were actually able to calculate what the scale of the weights should be to have the activations have a roughly Gaussian shape. So, batchnorm is not doing much here. But you might imagine that once you have a much deeper nn, that has lots of different types of operations and there’s also, for example, residual connections (which we’ll cover) and so on, it will become very very difficult to tune the scales of the weight matrices such that all the activations throughout the nn are roughly Gaussian: at scale, an intractable approach. Therefore, compared to that, it is much much easier to sprinkle batchnorm layers throughout the nn. In particular, it’s common to look at every single linear layer like this one hpreact = embcat @ w1 + b1 (multiply by a weight matrix and add a bias), or for example convolutions that also perform matrix multiplication, (just in a more “structured” format) and append a batchnorm layer right after it to control the scale of these activations at every point in the nn. So, we’d be adding such normalization layers throughout the nn to control the scale of these activation (again, throughout the nn) without requiring us to do perfect mathematics in order to manually control individual activation distributions for any “building block” (e.g. layer) we might want to introduce into our nn. So, batchnorm significantly stabilizes training and that’s why these layers are quite popular. Beware though, the stability offered by batchnorm often comes at a terrible cost. If you think about it, what is happening at a batchnorm layer (e.g. hpreact = bngain * (hpreact - hpreact.mean(0, keepdim=True)) / hpreact.std(0, keepdim=True) + bnbias) is something strange and terrible. Before introducing such a layer, it used to be the case that a single example was fed into the nn and then we calculated its activations and its logits in a deterministic manner in which a specific example yields specific logits. Then, for reasons of efficiency of learning, we started to use batches of examples. Those batches of examples were processed independently, but this was just an efficient thing. But now suddenly, with batchnorm, because of the normalization through the batch, we are mathematically coupling these examples in the forward pass and the backward pass of the nn. So with batchnorm, the hidden states (e.g. hpreact) and the output states (e.g. logits), are not just a function of the inputs of a specific example, but they’re also a function of all the other examples that happen to come for a ride in that batch. Damn! So what’s happening is, if you see for example the activations h = torch.tanh(hpreact), for every different example/batch, the activations are going to actually change slightly, depending on what other examples there are in the batch. Thus depending on what examples there are, h is going to jitter if you sample from many examples, since the statistics of the mean and std are going to be impacted. So, you’ll get a jitter for the h and for the logits values. And you’d think that this would be a bug or something undesirable, but in a very strange way, this actually turns out to be good in nn training as a side effect. The reason for that is that you can think of barchnorm as some kind of regularizer. Because what is happening is the you have your input and your h and because of the other examples the value of h is jittering a bit. What that does is that is effectively padding-out any one of these input examples and it’s introducing a little bit of entropy. And because of the padding-out, the jittering effect is actually kind of like a form of data augmentation, making it harder for the nn to overfit for these concrete specific examples. So, by introducing all this noise, it actually pads out the examples and it regularizes the nn. And that is the reason why, deceivingly, as a second-order effect, this is acts like a regularizer, making it harder for the us as a community to remove or do without batchnorm. Because, basically, no one likes this property that the examples in a batch are coupled mathematically in the forward pass and it leads to all kinds of strange results, bugs and so on. Therefore, people do not like these side effects so many have advocated for deprecating the use of batchnorm and move to other normalization techniques that do not couple the examples of a batch. Examples are layer normalization, instance normalization, group normalization, and so on. But basically, long story short, batchnorm is the first kind of normalization layer to be introduced, it worked extremely well, it happened to have this regularizing effect, it stabilized training and people have been trying to remove it and move unto the other normalization techniques. But it’s been hard, because it just works quite well. And some of the reason it works quite well is because of this regularizing effect and because it is quite effective at controlling the activations and their distributions. So, that’s the brief story of batchnorm. But let’s see one of the other weird outcomes of this coupling. Basically, once we’ve trained a nn, we’d like to deploy it in some kind of setting and we’d like to feed in a single individual example and get a prediction out from our nn. But how can we do that when our nn now with batchnorm in a forward pass estimates the statistics of the mean and the std of a batch and not a single example? The nn expects batches as an input now. So how do we feed in a single example and get sensible results out? The proposal in the batchnorm paper is the following. What we would like to do is implement a step after training that calculates and sets the batchnorm mean and std a single time over the training dataset. Basically, calibrate the batchnorm statistics at the end of training as such. We are going to get the training dataset and get the pre-activations for every single training example, and then one single time estimate the mean and std over the entire training set: two fixed numbers.

@torch.no_grad() # disable gradient calculation

def infer_mean_and_std_over_trainset():

# pass the entire training set through

emb = C[xtrain]

embcat = emb.view(emb.shape[0], -1)

hpreact = embcat @ w1 + b1 # hidden layer pre-activation

# measure the mean/std over the entire training set

bnmean_xtrain = hpreact.mean(dim=0, keepdim=True)

bnstd_xtrain = hpreact.std(dim=0, keepdim=True)

return bnmean_xtrain, bnstd_xtrain

bnmean_xtrain, bnstd_xtrain = infer_mean_and_std_over_trainset()

And so after calculating these values, at test time we are going to clamp them to the batchnorm calculation. To do so, let’s extend the forward and print_loss functions as such:

def forward(x, y, bnmean=None, bnstd=None):

emb = C[x]

embcat = emb.view(emb.shape[0], -1)

hpreact = embcat @ w1 + b1 # hidden layer pre-activation

# batchnorm

if bnmean is None:

bnmean = hpreact.mean(0, keepdim=True)

if bnstd is None:

bnstd = hpreact.std(0, keepdim=True)

hpreact = bngain * (hpreact - bnmean) / bnstd + bnbias

h = torch.tanh(hpreact)

logits = h @ w2 + b2

loss = F.cross_entropy(logits, y)

return hpreact, h, logits, loss

@torch.no_grad() # this decorator disables gradient tracking

def print_loss(x, y, prefix="", bnmean=None, bnstd=None):

_, _, _, loss = forward(x, y, bnmean=bnmean, bnstd=bnstd)

print(f"{prefix} {loss}")

return loss

Now, if we do an inference with these mean and std values, instead of the batch-specific ones:

print_loss(xtrain, ytrain, bnmean=bnmean_xtrain, prefix='train')

print_loss(xval, yval, bnstd=bnstd_xtrain, prefix='val');

train 2.0695457458496094

val 2.107233762741089

The losses we get may be more or less the same as our last losses before, but the benefit we have gained is that we can now forward a single example, because now the mean and std are fixed tensors. That said, because everyone is lazy, nobody wants to estimate the mean and std as a second stage after nn training. So, the batchnorm paper also introduced one more idea: that we can estimate these mean and std values in a running manner during the nn training phase. Let’s see what that would look like. First, we’ll define running value variables in the definition of our nn. Then we’ll modify the train function and calculate the running values:

def define_nn(

n_hidden=200, n_embd=10, w1_factor=1.0, b1_factor=1.0, w2_factor=1.0, b2_factor=1.0

):

global C, w1, b1, w2, b2, bngain, bnbias

g = torch.Generator().manual_seed(SEED)

C = torch.randn((vocab_size, n_embd), generator=g)

w1 = torch.randn(n_embd * block_size, n_hidden, generator=g) * w1_factor

b1 = torch.randn(n_hidden, generator=g) * b1_factor

w2 = torch.randn(n_hidden, vocab_size, generator=g) * w2_factor

b2 = torch.randn(vocab_size, generator=g) * b2_factor

bngain = torch.ones((1, n_hidden))

bnbias = torch.zeros((1, n_hidden))

bnmean_running = torch.ones((1, n_hidden))

bnstd_running = torch.zeros((1, n_hidden))

parameters = [C, w1, b1, w2, b2, bngain, bnbias]

print(sum(p.nelement() for p in parameters))

for p in parameters:

p.requires_grad = True

return bnmean_running, bnstd_running, parameters

def forward(x, y, bnmean=None, bnstd=None):

global bnmean_running, bnstd_running

emb = C[x]

embcat = emb.view(emb.shape[0], -1)

hpreact = embcat @ w1 + b1 # hidden layer pre-activation

# batchnorm

if bnmean is None:

bnmean = hpreact.mean(0, keepdim=True)

if bnstd is None:

bnstd = hpreact.std(0, keepdim=True)

hpreact = bngain * (hpreact - bnmean) / bnstd + bnbias

with torch.no_grad(): # disable gradient calculation

bnmean_running = 0.999 * bnmean_running + 0.001 * bnmean

bnstd_running = 0.999 * bnstd_running + 0.001 * bnstd

h = torch.tanh(hpreact)

logits = h @ w2 + b2

loss = F.cross_entropy(logits, y)

return hpreact, h, logits, loss

def train(x, y, initial_lr=0.1, maxsteps=200000, batchsize=32, redefine_params=False):

global parameters

lossi = []

if redefine_params:

parameters = define_nn()

for p in parameters:

p.requires_grad = True

for i in range(maxsteps):

bix = torch.randint(0, x.shape[0], (batchsize,))

xb, yb = x[bix], y[bix]

hpreact, h, logits, loss = forward(xb, yb)

backward(parameters, loss)

lr = initial_lr if i < 100000 else initial_lr / 10

update(parameters, lr=lr)

# track stats

if i % 10000 == 0: # print every once in a while

print(f"{i:7d}/{maxsteps:7d}: {loss.item():.4f}")

lossi.append(loss.log10().item())

return hpreact, h, logits, lossi

Now if we train, we will be calculating the running values of bnmean and bnstd without requiring a second step after training. This also happens when using PyTorch batchnorm layers: running values are calculated and then are used during inference. Now, let’s re-define our nn and train it.

bnmean_running, bnstd_running, parameters = define_nn(

w1_factor=kaiming_w1_factor, b1_factor=0.01, w2_factor=0.01, b2_factor=0.0

)

_ = train(xtrain, ytrain)

12297

0/ 200000: 3.3186

10000/ 200000: 2.5451

20000/ 200000: 2.1227

30000/ 200000: 2.3208

40000/ 200000: 2.3080

50000/ 200000: 2.2920

60000/ 200000: 2.1421

70000/ 200000: 2.2069

80000/ 200000: 2.3143

90000/ 200000: 1.6854

100000/ 200000: 2.1594

110000/ 200000: 2.1690

120000/ 200000: 2.2234

130000/ 200000: 2.2345

140000/ 200000: 1.8121

150000/ 200000: 2.3733

160000/ 200000: 1.7584

170000/ 200000: 2.7041

180000/ 200000: 2.0920

190000/ 200000: 1.8872

bnmean_xtrain, bnstd_xtrain = infer_mean_and_std_over_trainset()

print_loss(xtrain, ytrain, bnmean=bnmean_xtrain, prefix="train")

print_loss(xval, yval, bnstd=bnstd_xtrain, prefix="val");

train 2.06760311126709

val 2.109143018722534

If we calculate the mean over the whole training set and compare it with the running mean, we notice they are quite similar:

torch.set_printoptions(sci_mode=False)

bnmean_xtrain

tensor([[-2.6770, -0.1693, -0.6069, 0.4962, 0.7990, 0.6392, 2.3859, -1.3855,

1.1074, 1.4398, -1.2298, -2.4216, -0.5599, 0.1668, -0.2828, -0.7317,

1.0945, -1.9686, -1.1664, 0.6019, -0.1889, -0.8343, -0.6375, 0.6903,

0.6620, 0.0190, 1.1514, -0.0258, 0.3537, 1.8418, 0.2683, -0.8331,

0.5717, -0.5169, -0.0296, -1.6526, 0.7317, -0.2649, -0.1199, 0.3907,

-0.2578, -1.1813, -0.3795, 0.0315, 0.6800, -0.7146, 1.4800, -1.1142,

1.3800, 1.3333, 1.7228, -0.1832, 1.6916, 0.8562, 1.4595, -2.2924,

-0.3804, 0.4507, 1.9066, -1.4633, -0.8475, 1.2721, 0.8002, 0.2051,

2.0326, 1.2502, -1.0770, 1.3650, -0.9620, 0.4069, 0.3910, 0.6054,

0.0738, -1.3951, -2.4795, 0.1532, 1.1407, -0.4320, 0.6385, 0.3845,

0.3345, 0.9669, 1.5173, 0.5538, 0.8198, -0.2719, -0.7971, -0.3735,

2.4336, -0.6536, -1.1261, 0.8431, 0.0744, -0.9863, -1.0063, 0.1658,

0.4939, -1.2384, -0.7562, -0.8595, -0.2615, 0.1969, -1.7003, 1.0725,

1.0187, 0.2188, -0.4287, -0.2156, 0.7122, -1.0895, 1.0372, 0.1750,

0.0708, 1.3315, 2.8986, 1.5759, 1.1428, -0.4351, 0.4545, -0.2242,

-1.2595, -1.5032, 0.3134, 1.1210, -0.5699, -0.1829, 1.0623, -1.5076,

-1.3623, -0.6535, 2.5082, -0.4506, 0.7244, 1.3152, 0.9770, 0.9000,

-0.8565, 1.5871, 0.7384, 0.3593, 1.2161, 0.8446, 1.6187, 0.0483,

0.3879, 0.9822, 0.3694, -1.1177, 0.0051, 0.5489, -1.0130, 0.4538,

1.4678, 2.0332, 0.7353, -0.3556, 1.6172, -1.8053, -0.2439, 0.9442,

0.0504, -0.7963, 0.2883, -2.1548, -0.5377, -0.6621, -0.0440, -0.2134,

-2.3616, -0.7478, 0.3349, -2.1589, 0.3803, -1.2049, -0.9475, 0.7523,

1.8645, -0.7137, 1.1013, -1.0613, 1.6492, 1.3798, 0.7756, -0.9489,

-0.1432, -0.2982, -0.4837, 0.3259, 2.6390, 0.8259, 0.2949, 1.7561,

-0.7375, -0.1671, 0.7696, 1.0035, 1.2708, -0.7615, -0.1892, 1.1808]])

bnmean_running

tensor([[-2.6439, -0.1617, -0.6136, 0.4968, 0.7971, 0.6411, 2.3763, -1.3774,

1.1354, 1.4372, -1.2115, -2.3877, -0.5565, 0.1727, -0.2845, -0.7350,

1.1087, -1.9475, -1.1556, 0.6244, -0.1834, -0.8322, -0.6170, 0.6846,

0.6547, 0.0314, 1.1314, -0.0138, 0.3604, 1.8411, 0.2521, -0.8311,

0.5715, -0.5084, -0.0265, -1.6514, 0.7295, -0.2540, -0.1140, 0.4024,

-0.2471, -1.1745, -0.3745, 0.0327, 0.6927, -0.7181, 1.4660, -1.1102,

1.3809, 1.3116, 1.7163, -0.1866, 1.6785, 0.8492, 1.4503, -2.3023,

-0.3868, 0.4478, 1.8868, -1.4559, -0.8400, 1.2690, 0.7847, 0.2059,

2.0220, 1.2630, -1.0618, 1.3643, -0.9662, 0.3970, 0.3910, 0.5977,

0.0795, -1.3865, -2.4648, 0.1534, 1.1447, -0.4265, 0.6496, 0.4061,

0.3352, 0.9816, 1.5135, 0.5539, 0.8205, -0.2807, -0.8094, -0.3802,

2.4052, -0.6548, -1.1304, 0.8636, 0.0803, -0.9660, -1.0143, 0.1948,

0.5108, -1.2296, -0.7239, -0.8933, -0.2621, 0.2005, -1.6910, 1.0689,

1.0074, 0.2274, -0.4184, -0.2276, 0.7187, -1.0911, 1.0455, 0.1610,

0.0798, 1.3174, 2.9053, 1.5687, 1.1363, -0.4269, 0.4681, -0.2275,

-1.2518, -1.5101, 0.3332, 1.1115, -0.5765, -0.1845, 1.0573, -1.5042,

-1.3581, -0.6503, 2.4976, -0.4533, 0.7215, 1.3105, 0.9769, 0.8943,

-0.8746, 1.5900, 0.7509, 0.3625, 1.2261, 0.8343, 1.6215, 0.0652,

0.3875, 1.0001, 0.3721, -1.1022, -0.0122, 0.5340, -1.0139, 0.4521,

1.4687, 2.0395, 0.7374, -0.3479, 1.6156, -1.7936, -0.2443, 0.9528,

0.0623, -0.7957, 0.2997, -2.1441, -0.5244, -0.6628, -0.0482, -0.1997,

-2.3657, -0.7569, 0.3328, -2.1407, 0.3706, -1.2124, -0.9397, 0.7629,

1.8574, -0.7038, 1.1025, -1.0656, 1.6602, 1.3632, 0.7866, -0.9584,

-0.1377, -0.2888, -0.4677, 0.3213, 2.6165, 0.8160, 0.2933, 1.7396,

-0.7484, -0.1775, 0.7626, 1.0181, 1.2647, -0.7750, -0.1872, 1.1865]])

Similarly:

bnstd_xtrain

tensor([[2.3470, 2.1151, 2.1772, 2.0503, 2.2926, 2.3693, 2.1253, 2.5290, 2.3184,

2.1632, 2.2796, 2.2958, 2.1392, 2.3227, 2.0948, 2.6618, 2.3286, 1.9229,

2.2178, 2.6903, 2.2699, 2.4215, 2.1179, 2.0361, 2.0093, 1.8318, 2.1781,

2.3840, 2.2855, 2.4143, 1.6977, 1.7918, 2.0156, 2.0423, 1.9195, 1.7332,

2.1424, 2.2936, 1.8010, 1.8278, 2.2029, 2.1118, 2.3197, 1.7458, 2.3419,

1.9642, 2.1103, 2.4709, 2.0671, 2.4320, 2.0708, 1.6809, 2.0100, 1.8361,

2.4507, 2.2494, 1.9286, 2.2677, 2.6837, 1.9560, 2.2003, 2.0271, 1.9214,

2.2211, 2.3771, 2.3485, 1.9970, 2.1871, 2.1062, 2.1121, 1.9403, 2.0357,

2.0631, 2.1141, 2.0321, 1.4313, 2.3739, 2.3750, 1.7508, 2.3588, 1.9391,

2.0428, 1.9524, 2.1434, 2.4703, 2.3452, 2.1779, 2.3140, 2.5282, 2.6035,

2.0373, 1.9570, 2.4558, 1.9520, 2.0133, 2.3092, 2.0963, 1.9307, 2.1936,

2.0732, 2.2833, 1.9115, 2.1663, 2.0248, 1.7752, 2.3723, 2.0549, 2.2011,

1.9060, 2.1103, 2.3679, 2.2174, 2.3703, 2.4740, 2.7726, 2.4209, 1.8527,

1.9249, 1.9286, 2.1562, 2.1385, 2.1264, 2.0558, 2.0809, 1.9615, 2.0763,

2.0409, 2.3690, 1.8694, 2.3763, 2.0429, 2.6295, 2.1289, 1.8621, 1.9486,

2.1482, 2.2445, 3.0612, 1.9641, 1.9791, 2.0894, 1.7463, 2.1585, 1.9308,

1.9275, 2.3182, 2.3112, 2.1799, 1.9871, 1.7467, 1.7394, 2.1327, 2.0271,

2.2697, 2.1686, 2.1339, 1.9851, 1.8926, 1.8833, 1.9115, 2.2966, 1.9413,

2.1535, 2.2445, 2.2070, 1.6808, 2.2534, 1.7394, 1.9822, 2.1503, 1.9299,

2.2190, 2.2701, 2.1555, 2.3559, 2.0457, 2.2009, 2.0695, 2.2631, 1.9018,

2.4972, 2.1612, 2.2842, 1.8935, 2.0535, 2.2222, 2.0146, 2.2677, 2.3287,

2.1751, 2.2328, 2.1815, 2.0942, 1.8494, 2.1692, 2.1498, 2.0431, 2.6586,

2.3651, 1.8138]])

bnstd_running

tensor([[2.3397, 2.1127, 2.1607, 2.0195, 2.2593, 2.3516, 2.1103, 2.5075, 2.3009,

2.1339, 2.2692, 2.2746, 2.1203, 2.3036, 2.0772, 2.6334, 2.3094, 1.9119,

2.1908, 2.6739, 2.2396, 2.3972, 2.0972, 2.0194, 1.9913, 1.8106, 2.1576,

2.3601, 2.2604, 2.4005, 1.6662, 1.7701, 1.9929, 2.0250, 1.9011, 1.7245,

2.1089, 2.2855, 1.7875, 1.8095, 2.1857, 2.0851, 2.2970, 1.7297, 2.3121,

1.9505, 2.0847, 2.4411, 2.0630, 2.4087, 2.0420, 1.6596, 1.9859, 1.8215,

2.4230, 2.2367, 1.9207, 2.2545, 2.6714, 1.9398, 2.1691, 2.0143, 1.9043,

2.1866, 2.3438, 2.3331, 1.9744, 2.1716, 2.0918, 2.0947, 1.9186, 2.0143,

2.0387, 2.0704, 2.0176, 1.4192, 2.3597, 2.3436, 1.7193, 2.3276, 1.9210,

2.0164, 1.9422, 2.1131, 2.4389, 2.3320, 2.1649, 2.2978, 2.5055, 2.5902,

2.0084, 1.9485, 2.4278, 1.9296, 2.0035, 2.2858, 2.0765, 1.9142, 2.1631,

2.0530, 2.2614, 1.9054, 2.1492, 2.0110, 1.7607, 2.3342, 2.0385, 2.1851,

1.8868, 2.0890, 2.3435, 2.1972, 2.3476, 2.4317, 2.7641, 2.3959, 1.8332,

1.9081, 1.9148, 2.1321, 2.1238, 2.1028, 2.0405, 2.0542, 1.9374, 2.0692,

2.0270, 2.3360, 1.8488, 2.3442, 2.0212, 2.6016, 2.1188, 1.8529, 1.9363,

2.1399, 2.2261, 3.0514, 1.9437, 1.9584, 2.0580, 1.7288, 2.1423, 1.8827,

1.9192, 2.3113, 2.2884, 2.1547, 1.9679, 1.7264, 1.7157, 2.1096, 2.0085,

2.2409, 2.1413, 2.1120, 1.9617, 1.8712, 1.8732, 1.8953, 2.2746, 1.9251,

2.1248, 2.2261, 2.1831, 1.6496, 2.2212, 1.7222, 1.9535, 2.1321, 1.9156,

2.1960, 2.2431, 2.1481, 2.3300, 2.0081, 2.1739, 2.0539, 2.2523, 1.8856,

2.4582, 2.1390, 2.2654, 1.8744, 2.0369, 2.1935, 2.0023, 2.2502, 2.3119,

2.1646, 2.2128, 2.1717, 2.0855, 1.8304, 2.1477, 2.1137, 2.0246, 2.6384,

2.3380, 1.7943]])

Therefore, we can easily infer the loss using the running values:

print_loss(xtrain, ytrain, bnmean=bnmean_running, prefix="train")

print_loss(xval, yval, bnstd=bnstd_running, prefix="val");

train 2.0676608085632324

val 2.1091856956481934

And the resulting losses are basically identical. So, calculating running mean and std values eliminates the need for calculating them in a second step after training. Ok, so we are almost done with batchnorm. There are two more notes to make. First, is that we skipped the discussion of what the \(\epsilon\) term is that is added to the normalization step’s denominator square root. It is usually a small, fixed number (e.g. 1e-05) by default. What this number does is that it prevents a division by \(0\) in the case that the variance over the batch is exactly \(0\). We could add it in our example and feel free to, but we are just going to skip it since a \(0\) variance is very very unlikely in our very simple example. Second note is that we are being wasteful with b1 in forward(). There, we are first adding b1 to embcat @ w1 to calculate hpreact, but then, within the batchnorm layer, we are normalizing by subtracting the pre-activation mean (that contains b1), which basically subtracts b1 out, rendering it redundant:

...

hpreact = embcat @ w1 + b1 # hidden layer pre-activation

# batchnorm

if bnmean is None:

bnmean = hpreact.mean(0, keepdim=True)

if bnstd is None:

bnstd = hpreact.std(0, keepdim=True)

hpreact = bngain * (hpreact - bnmean) / bnstd + bnbias

...

Since it is being subtracted out, as a parameter it is neither contributing to the nn training or inference nor is it being optimized. If we inspect it’s gradient attribute, it is zero:

print(b1.grad)

torch.testing.assert_close(b1.grad, torch.zeros(b1.shape))

tensor([ 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

0.0000, -0.0000, 0.0000, -0.0000, 0.0000,

0.0000, -0.0000, -0.0000, 0.0000, -0.0000,

-0.0000, -0.0000, 0.0000, 0.0000, -0.0000,

0.0000, 0.0000, 0.0000, -0.0000, -0.0000,

-0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

-0.0000, 0.0000, 0.0000, 0.0000, -0.0000,

-0.0000, 0.0000, 0.0000, -0.0000, -0.0000,

0.0000, -0.0000, 0.0000, 0.0000, -0.0000,

0.0000, -0.0000, -0.0000, -0.0000, 0.0000,

-0.0000, 0.0000, -0.0000, 0.0000, 0.0000,

-0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, -0.0000, 0.0000, -0.0000, -0.0000,

0.0000, 0.0000, 0.0000, -0.0000, -0.0000,

-0.0000, -0.0000, 0.0000, 0.0000, 0.0000,

0.0000, -0.0000, 0.0000, -0.0000, -0.0000,

-0.0000, 0.0000, -0.0000, 0.0000, -0.0000,

-0.0000, 0.0000, 0.0000, -0.0000, -0.0000,

0.0000, -0.0000, -0.0000, -0.0000, -0.0000,

0.0000, -0.0000, -0.0000, 0.0000, -0.0000,

0.0000, 0.0000, -0.0000, 0.0000, -0.0000,

0.0000, 0.0000, 0.0000, 0.0000, -0.0000,

0.0000, -0.0000, 0.0000, -0.0000, 0.0000,

-0.0000, 0.0000, -0.0000, 0.0000, 0.0000,

-0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

-0.0000, 0.0000, 0.0000, 0.0000, -0.0000,

-0.0000, -0.0000, 0.0000, -0.0000, 0.0000,

-0.0000, -0.0000, -0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, -0.0000,

-0.0000, 0.0000, 0.0000, 0.0000, -0.0000,

0.0000, -0.0000, -0.0000, 0.0000, 0.0000,

-0.0000, -0.0000, -0.0000, -0.0000, -0.0000,

0.0000, -0.0000, 0.0000, -0.0000, 0.0000,

-0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

-0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, -0.0000, 0.0000, -0.0000, 0.0000,

-0.0000, -0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, -0.0000, 0.0000, -0.0000])

Therefore, whenever using batchnorm, then if you have any layers with weights before it, like a linear layer or a convolutional layer or something like that, you are better off disabling the bias parameter for that layer, since we have the batchnorm bias (e.g. bnbias in our case) which compensates for it. To sum up this point: batchnorm has its own bias and thus there’s no need to have a bias in the layer before it, because that bias is going to be subtracted out anyway. So that’s the other small detail to be careful of sometimes. Of course, keeping a unnecessary bias in a layer is not going to do anything catastrophic but it is not going to be doing anything and is just wasteful, so it is better to remove it. Therefore, let’s deprecate b1, the first layer bias, from our network and add some nice comments:

def define_nn(

n_hidden=200, n_embd=10, w1_factor=1.0, b1_factor=1.0, w2_factor=1.0, b2_factor=1.0

):

global C, w1, w2, b2, bngain, bnbias

g = torch.Generator().manual_seed(SEED)

C = torch.randn((vocab_size, n_embd), generator=g)

w1 = torch.randn(n_embd * block_size, n_hidden, generator=g) * w1_factor

w2 = torch.randn(n_hidden, vocab_size, generator=g) * w2_factor

b2 = torch.randn(vocab_size, generator=g) * b2_factor

# batchnorm layer parameters

bngain = torch.ones((1, n_hidden))

bnbias = torch.zeros((1, n_hidden))

bnmean_running = torch.ones((1, n_hidden))

bnstd_running = torch.zeros((1, n_hidden))

parameters = [C, w1, w2, b2, bngain, bnbias]

print(sum(p.nelement() for p in parameters))

for p in parameters:

p.requires_grad = True

return bnmean_running, bnstd_running, parameters

def forward(x, y, bnmean=None, bnstd=None):

global bnmean_running, bnstd_running

emb = C[x]

embcat = emb.view(emb.shape[0], -1)

# linear layer

hpreact = embcat @ w1 # hidden layer pre-activation

# batchnorm layer

if bnmean is None:

bnmean = hpreact.mean(0, keepdim=True)

if bnstd is None:

bnstd = hpreact.std(0, keepdim=True)

hpreact = bngain * (hpreact - bnmean) / bnstd + bnbias

with torch.no_grad(): # disable gradient calculation

bnmean_running = 0.999 * bnmean_running + 0.001 * bnmean

bnstd_running = 0.999 * bnstd_running + 0.001 * bnstd

# non-linearity

h = torch.tanh(hpreact) # hidden layer

logits = h @ w2 + b2 # output layer

loss = F.cross_entropy(logits, y) # loss function

return bnmean, bnstd, hpreact, h, logits, loss

For a final sum up: we use batchnorm to control the statistics of activations in a nn. It is common to sprinkle batchnorm layers across the nn and usually we will place it after layers that have multiplications (linear, convolutional, etc.). Internally, batchnorm has parameters for the gain (e.g. bngain) and the bias (e.g. bnbias). And these are trained using backprop. It also has two buffers. These are the running mean and the running mean of the std, which are not trained using backprop but which are updated during, and finally calculated after, training. So, what batchnorm does is it calculates the batch mean and std of the activations that are feeding into batchnorm layer, then it’s centering that batch to be unit Gaussian and then it’s offsetting and scaling it by the learned bias (e.g. bnbias) and gain (e.g. bngain). And then, on top of that, it’s keeping track of the mean and std of the inputs, which are then used during inference. In addition, this allows us to forward individual examples during test time. So, that’s the batchnorm layer, which is a fairly complicated layer, but this is a simple example of what it’s doing internally. Now, we are going to go through a real example.

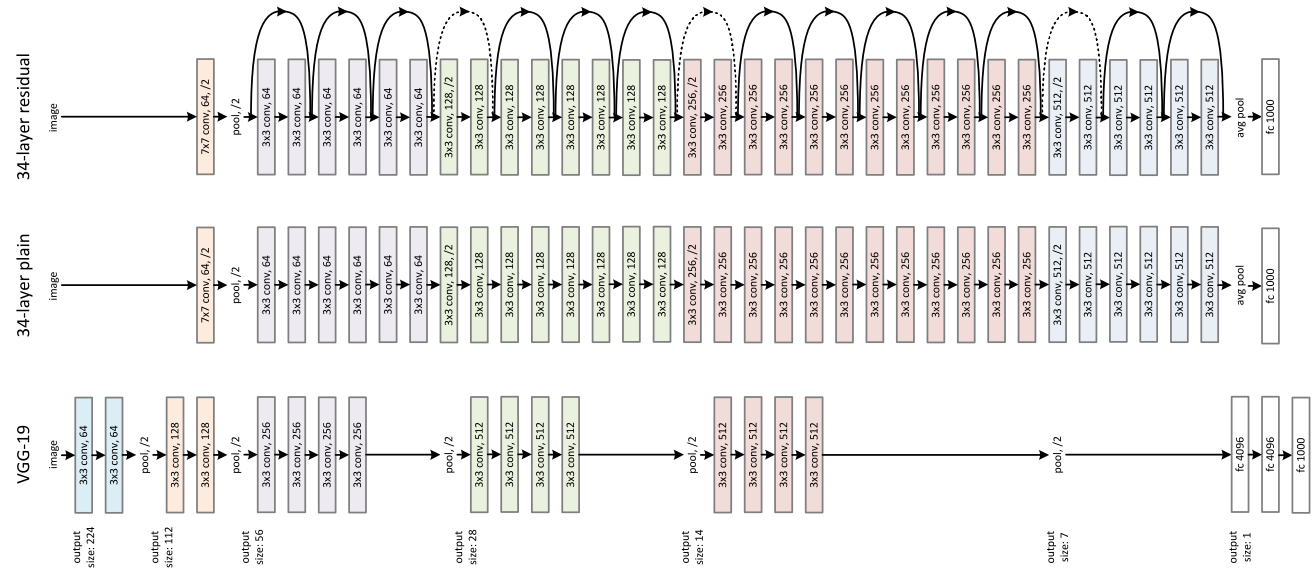

ResNet#

residual nns ( resnets) are common types of nns used for image classification. Although we haven’t yet nor will we be covering or explaining all the pieces of these networks in detail, it is still worth noting that an image basically feeds into a resnet, and there are many many layers with repeating structure all the way to the output layer the gives us the predictions (e.g. what is inside the input image).

from IPython.display import Image, display

display(Image(filename="resnet.png"))

resnets (top network in the above image) are a repeating structure made up of blocks that are sequentially stacked-up. In PyTorch, each such block is defined as a Bottleneck object:

class Bottleneck(nn.Module):

# Bottleneck in torchvision places the stride for downsampling at 3x3 convolution(self.conv2)

# while original implementation places the stride at the first 1x1 convolution(self.conv1)

# according to "Deep residual learning for image recognition" https://arxiv.org/abs/1512.03385.

# This variant is also known as ResNet V1.5 and improves accuracy according to

# https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch.

expansion: int = 4

def __init__(

self,

inplanes: int,

planes: int,

stride: int = 1,

downsample: Optional[nn.Module] = None,

groups: int = 1,

base_width: int = 64,

dilation: int = 1,

norm_layer: Optional[Callable[..., nn.Module]] = None,

) -> None:

super().__init__()

if norm_layer is None:

norm_layer = nn.BatchNorm2d

width = int(planes * (base_width / 64.0)) * groups

# Both self.conv2 and self.downsample layers downsample the input when stride != 1

self.conv1 = conv1x1(inplanes, width)

self.bn1 = norm_layer(width)

self.conv2 = conv3x3(width, width, stride, groups, dilation)

self.bn2 = norm_layer(width)

self.conv3 = conv1x1(width, planes * self.expansion)

self.bn3 = norm_layer(planes * self.expansion)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

self.stride = stride

def forward(self, x: Tensor) -> Tensor:

identity = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.relu(out)

out = self.conv3(out)

out = self.bn3(out)

if self.downsample is not None:

identity = self.downsample(x)

out += identity

out = self.relu(out)

return out

Although we haven’t covered all the components of the above pytorch snippet (e.g. CNNs), let’s point out some small pieces of it. The constructor, __init__, basically initializes the nn, similarly to our define_nn function. And, similarly to our forward function, the Bottleneck.forward method specifies how the nn acts for a given input x. Now, if you initialize a bunch of Bottleneck blocks and stack them up serially, you get a resnet. Notice what is happening here. We have convolutional layers (e.g. conv1x1, conv3x3). These are the same thing as a linear layer, except that convolutional layers are used for images and so they have spatial structure. What this means is that the linear multiplication and bias offset (e.g. logits = h @ w2 + b2) are done on overlapping patches, or parts, of the input, instead on the full input (since the images have spatial structure). Otherwise though, convolutional layers basically do an wx + b type of operation. Then, we have a norm layer (e.g. bn1), which is initialized to be a 2D batchnorm layer (BatchNorm2d). And then, there is a relu non-linearity. We have used \(tanh\) so far, but these are both common non-linearities that can be used relatively interchangeably. But for very deep networks, \(ReLU\) typically and empirically works a bit better. And in the Bottleneck.forward method, you’ll notice the following pattern: conv layer -> batchnorm layer -> relu, repeated three times. This however is basically almost exactly the same pattern employed in our forward function: linear layer -> batchnorm layer -> tanh. And that’s the motif that you would be stacking up when you would be creating these deep nns that are called resnets. Now, if you dig deeper into the PyTorch resnet implementation, you’ll notice that in the functions that return a convolutional layer, e.g. conv1x1:

def conv1x1(in_planes: int, out_planes: int, stride: int = 1) -> nn.Conv2d:

"""1x1 convolution"""

return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)

Interim summary#